Quick Read

- Artificial intelligence (AI) is rapidly accelerating the speed and complexity of cyberattacks, particularly in industrial operational technology (OT) environments.

- Attackers leverage AI for automated reconnaissance, targeted phishing, and sophisticated malware creation, significantly lowering the barrier for complex threats.

- In 2025, ransomware actors posted 7,819 incidents to data leak sites globally, with the U.S. being the most targeted nation.

- AI-driven attacks are shifting from immediate disruption to subtle, persistent operational degradation, making detection harder.

- Traditional security controls and even zero-trust principles struggle to keep pace with adaptive, AI-assisted adversaries due to structural and organizational gaps between IT and OT.

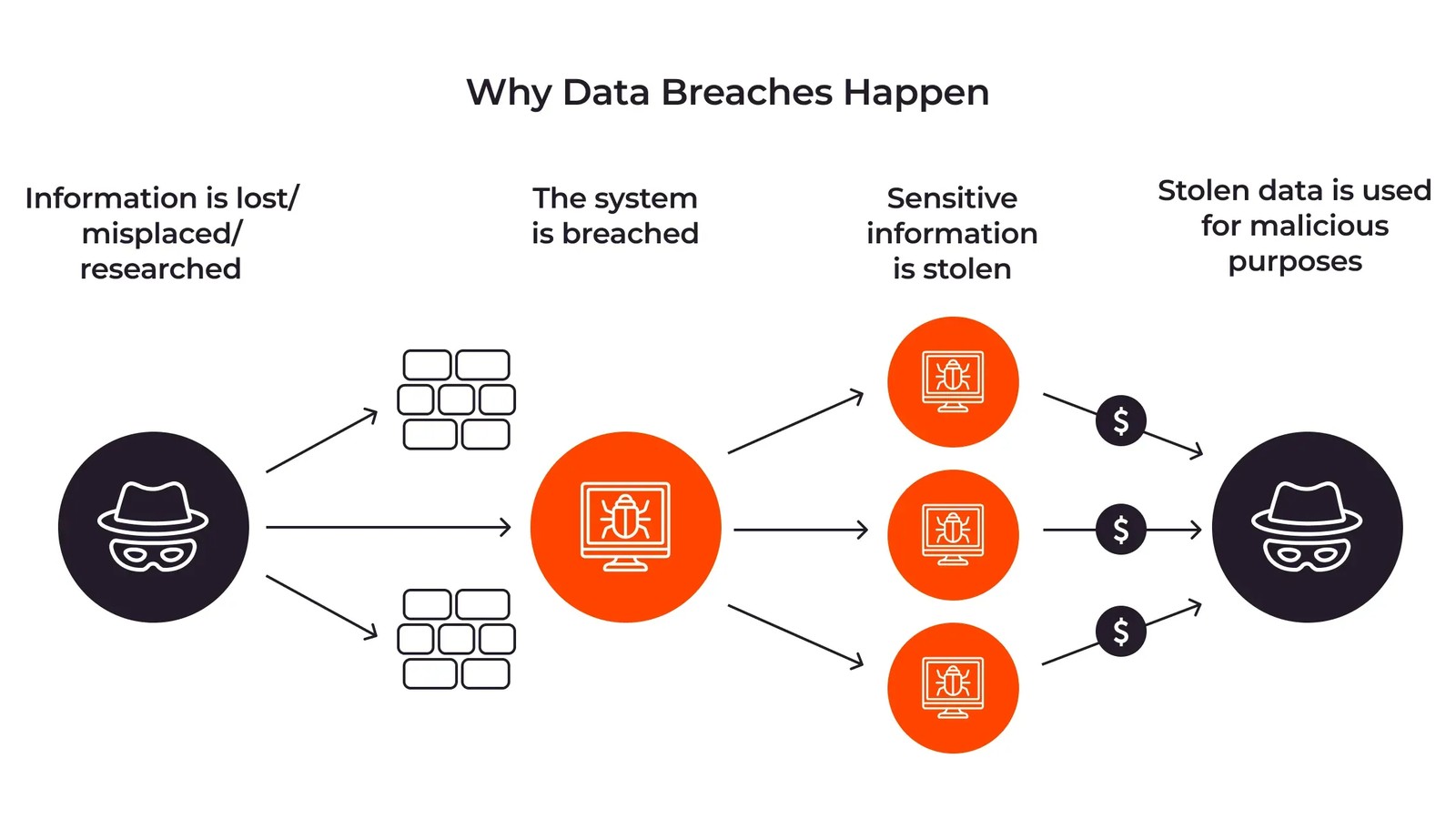

YEREVAN (Azat TV) – Data breaches, incidents in which sensitive, protected, or confidential data is accessed or exfiltrated by unauthorized parties, are undergoing a profound transformation. According to recent analysis from cybersecurity experts, artificial intelligence (AI) is rapidly increasing the speed, scale, and sophistication of cyber threats and fundamentally reshaping how breaches occur, particularly in critical industrial operational technology (OT) environments. Work that once required specialized teams and lengthy development cycles can now be done in minutes, putting traditional defenses under growing strain.

The implications are far-reaching: AI lowers the barrier to entry for less sophisticated adversaries, enabling them to execute highly complex cyberattacks. Data from ecrime.ch shows that 7,819 ransomware incidents were posted to data leak sites in 2025 alone, with the United States bearing the brunt at nearly 4,000. These figures underscore the scale of an immediate and evolving threat.

AI’s Role in Accelerating Cyber Attack Lifecycles

Experts emphasize that AI functions predominantly as a ‘sophisticated technical force multiplier’ rather than an autonomous digital soldier. Fernando Guerrero Bautista, an OT security expert at Airbus Protect, said that in practice AI is being used to reverse-engineer proprietary industrial protocols and to generate highly targeted spear-phishing campaigns that mimic the technical lexicon of substation operators. In this role, AI significantly speeds up work that is still human-driven, such as automating reconnaissance, crafting functional exploit code, and building sophisticated malware frameworks.

Paul Lukoskie, senior director of threat intelligence at Dragos, highlighted that AI drastically lowers the barrier to entry for adversaries, allowing them to scale initial intrusion tactics such as social engineering. AI can also help attackers discover vulnerabilities, write code that evades endpoint detection tools, and optimize attack paths once they have established a foothold. In two examples from 2025, the GTG-2002 and GTG-1002 campaigns, attackers reportedly used Anthropic’s Claude Code to automate multiple stages of intrusion, including reconnaissance, vulnerability scanning, lateral movement, and credential theft.

Eric Knapp, product manager at Nozomi Networks, echoed that AI empowers attackers across the entire attack lifecycle, from planning and reconnaissance through execution and data exfiltration. He stressed that humans remain the weakest link, that AI lets attackers exploit this weakness relentlessly, and that the arsenal of zero-day exploits is growing.

The Evolving Nature of Industrial Data Breaches

The impact of AI-driven attacks is also changing. Instead of immediate, disruptive incidents such as blackouts, AI enables what Bautista calls ‘subtle, persistent operational degradation.’ Attackers can use AI to disguise minute manipulations of voltage regulation or chemical mixtures as normal equipment aging or grid noise. Such changes are designed to erode long-term reliability and profitability without triggering safety systems, turning a cyber incident into an economic siege.

Steve Mustard, an independent automation consultant, noted that rather than causing immediate disruption, AI attacks may inflict economic harm, erode safety, or undermine confidence, which makes them harder to detect. Dennis Hackney, vice-chairperson of the ISA Global Cybersecurity Alliance (ISAGCA) Advisory Board, identified data exfiltration as the primary goal of AI-based cyber events to date, with stolen data including critical infrastructure details, accounts, and passwords. Concern is also growing rapidly about AI model poisoning, the deliberate tampering with the data or models that AI systems rely on, which could compromise predictive maintenance, optimization, and digital twins.

Challenges for Traditional Defenses and Zero Trust Principles

Existing OT security controls are struggling to keep pace with these adaptive, AI-assisted threat actors. Many traditional systems are signature-based and recognize only known-bad patterns. AI-assisted threats, however, can be polymorphic, adapting their behavior in real time to blend in with legitimate industrial traffic. Bautista identified the ‘Context Gap’ between IT and OT security teams as a significant structural weakness: security teams understand data packets but often lack knowledge of industrial physics, while plant operators understand the physics but may miss cyber anomalies masked as process fluctuations.

While zero-trust principles (microsegmentation, strict authentication, and least-privilege policies) can slow lateral movement and reduce exposure, they are not a panacea for OT environments. Lukoskie pointed out that zero trust is often unrealistic for OT asset owners because of legacy systems and proprietary protocols that lack built-in security. Furthermore, industrial organizations prioritize availability and safety, and the continuous monitoring that zero trust requires could reduce system efficiency.

Mustard underscored that strong identity controls, network segmentation, and least-privilege access offer real value against AI-assisted adversaries. However, once attackers gain legitimate access, AI is more likely to help them blend in than to bypass controls outright. Hackney added that traditional security operations, which rely on signature-based or anomaly-based systems, cannot keep up with how rapidly and unpredictably agentic AI can change tactics and technologies.

AI’s acceleration of cyber threats demands a fundamental rethinking of industrial cybersecurity: moving beyond static defenses, embedding continuous learning, and bridging the critical gap in operational understanding between IT and OT. In this new era, resilience will be defined by adaptive defense strategies.